In the data science landscape, there is a lot of buzz around the latest Deep Learning discoveries, and AI use cases keeps growing. But there is a domain that makes less noise but is just as important: Data Anonymization. To draw a realistic portrait of the situation, this field of research is neglected by data scientists. We only have to note the small number of scientific articles on the subject.

Why this area would be so important? The answer is simple: If the data is not protected by a systematic process, the sustainability of the business is at stake. It is essential for a company to ensure that its data is resistant to malicious intrusions.

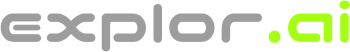

It is in this context that we introduce differential privacy. Its difference with encryption is major. With the latter, it is possible to know the real data if we are in possession of the encryption key.

The first scenario is an example of the encryption process (information on the left columns are transformed). The individual in possession of the key can still transform the encrypted data into its original form. The problem arises if a malicious person gets access to the key.

The second example is an application of differential privacy. This process transforms data in one direction, it is not a reversible process. Coupled with good encryption, this process brings great security for your organization and is an essential step in data governance.

Several aspects have not been covered: Is there an impact on machine learning accuracy? Are there different ways of transforming and protecting data sets? These are topics of upcoming articles !

If you have any question, feel free to reach us!

The research done on anonymization has been made possible thanks to the support of Mitacs through their Business Strategy Internship program.